November 15-21

(ↄ) All rights reversed, all wrongs reserved

Topic 17: The Hacker Approach: Development of Free Licenses

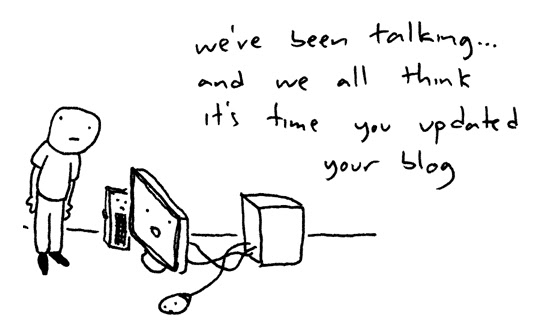

Study the GNU GPL and write a short blog essay about it. You may use the SWOT analysis model (strengths, weaknesses, opportunities, threats).


The GNU General Public License (GNU GPL or simply GPL) is the most widely used free software license, originally written by Richard Stallman for the GNU Project. The GNU website states: "Free software is a matter of liberty, not price. To understand the concept, you should think of 'free' as in 'free speech', not as in 'free beer'."

The GPL is the first copyleft license for general use, which means that derived works can only be distributed under the same license terms. While copyright law gives software authors control over copying, distribution and modification of their works, the goal of copyleft is to give all users of the software the freedom to carry out these activities. Under this philosophy, the GPL grants the recipients of a computer program the rights of the free software definition and uses copyleft to ensure the freedoms are preserved, even when the work is changed or added to.

In this way, copyleft licenses are distinct from other types of free software licenses, which do not guarantee that all "downstream" recipients of the program receive these rights, or the source code needed to make them effective. In particular, permissive free software licenses such as BSD allow redistributors to remove some or all of these rights, and do not require the distribution of source code. While BSD advocates find copyleft restrictive (with regard to the GPL's tendency to absorb BSD-licensed code without allowing the original BSD work to benefit from it), some observers believe that the strong copyleft provided by the GPL was crucial to the success of GNU/Linux, giving the programmers who contributed to it the confidence that their work would benefit the whole world and remain free, rather than being exploited by software companies that would not have to give anything back to the community.

Where there is success, there is often criticism. Because any works derived from a copyleft work must themselves be copyleft when distributed, they are said to exhibit a so-called viral phenomenon. Microsoft vice-president Craig Mundie remarked, "This viral aspect of the GPL poses a threat to the intellectual property of any organization making use of it." In another context, Steve Ballmer declared that code released under the GPL is useless to the commercial sector (since it can only be used if the resulting surrounding code becomes GPL), describing it as "a cancer that attaches itself in an intellectual property sense to everything it touches". On the other hand, as K. Lotan observes in Efficiency Analysis of the Law and Order of the GPL, many corporations have discovered that they can use the open source model for their commercial needs, among other things as a marketing and business strategy in which the profit flows from ancillary products and services. Consequently, what began as an ideologically motivated approach broadened into a widespread opportunity for commercial firms to embrace a viable alternative to proprietary software.

Lotan points out the advantages, writing: "Whether created by a commercial firm, the community, or both, the open source paradigm has numerous advantages. First, it increases the dissemination of information by offering a software product which costs nothing. It avoids users 'lock in' problem, a dilemma often associated with proprietary products that, due to the high costs of switching systems, can potentially lock users into staying with one company's system while being charged monopoly prices and subjected to monopoly licensing terms. In this way the open source system creates a threat that nudges proprietary systems into the zone of competition. In addition, the communal method of creation possesses specific advantages that closed systems lack, including more dynamism and a better use of employee skills, along with enhanced quality control." As Eric Raymond (a computer programmer, author of The Cathedral and the Bazaar, and open source software advocate) states, "Given enough eyeballs, all bugs are shallow."

Challenges in the future

Stallman admits in his essay about the GNU Project that several challenges make the future of free software uncertain; meeting them will require steadfast effort and endurance.

First, hardware manufacturers increasingly tend to keep hardware specifications secret. This makes it difficult to write free drivers so that Linux and XFree86 can support new hardware. "We have complete free systems today, but we will not have them tomorrow if we cannot support tomorrow's computers," Stallman writes.

Second, a nonfree library that runs on free operating systems acts as a trap for free software developers. Stallman's example is Qt, a GUI toolkit library that was nonfree at the time and was used in a substantial collection of free software, the KDE desktop. The library's attractive features are the bait; if you use the library, you fall into the trap, because your program cannot usefully be part of a free operating system. If a program that uses the proprietary library becomes popular, it can lure other unsuspecting programmers into the trap.

Third, in Stallman's view the worst threat comes from software patents, which can put algorithms and features off limits to free software for up to twenty years.

Finally, he argues that the biggest deficiency in free operating systems is not in the software but in the lack of good free manuals that can be included with them. Documentation is an essential part of any software package; when an important free software package does not come with a good free manual, that is a major gap, and Stallman notes that many such gaps exist today.

Topic 18: The Millennium Bug in the WIPO Model

Find a good example of the "science business" described above and analyse it as a potential factor in the Digital Divide discussed earlier. Is the proposed connection likely or not? Blog your opinion.

An empirical study

An empirical study published in 2010 showed that roughly 20% of the total output of peer-reviewed articles was openly accessible. Of all scientific fields, chemistry (13%) had the lowest overall share of OA* and earth sciences (33%) the highest. In medicine, biochemistry and chemistry, gold publishing in OA journals was more common than author posting of manuscripts in repositories. In all other fields, author-posted green copies dominated the picture.

Let's take a look at the field of biotech. Thirty years ago it appeared as if biotech would not only revolutionize healthcare, but also radically improve the very process of R&D itself. This hasn't happened. Though some firms such as Amgen have created dramatic breakthroughs, the overall industry track record is poor – in aggregate, the sector has lost money during this period.

In an interview, Sean Silverthorne asked Prof. Gary P. Pisano, author of the book Science Business: The Promise, the Reality, and the Future of Biotech, what went wrong. In his answers, Pisano points to systemic flaws as well as unhealthy tensions between science and business.

- The biotech industry has underperformed expectations, caught in the conflicting objectives and requirements between science and business.

- The industry needs to realign business models, organizational structures, and financing arrangements so they will place greater emphasis on long-term learning over short-term monetization of intellectual property.

- A lesson to managers: Break away from a strategy of doing many narrow deals and focus on fewer but deeper relationships.

According to Pisano, monetization of intellectual property "has worked wonderfully in semiconductors and software, but monetization of IP only works there because of some very specific conditions. You need to have a very modular knowledge base; that is, you need to be able to break up a 'big puzzle' into its relatively independent pieces so that a particular piece can be valued independently; and you have to have well defined intellectual property rights. These conditions are pretty well met in industries like semiconductors and software; but they don't characterize at all the state of the science in biotech. So, as a result, we have been pursuing an anatomy that focuses on breaking up the pieces of the puzzle into independent pieces (having lots of small specialized firms) when what matters is the way we integrate the pieces."

Underscoring the tensions between science and business inherent in a science-based business, Pisano explains: "Science and business work differently. They have different cultures, values, and norms. For instance, science holds methods sacred; business cherishes results. Science should be about openness; business is about secrecy. Science demands validity; business requires utility. So, the tensions are deep. What has happened is that we have tried to mash these two worlds together in biotech and may not be doing either very well. Science could be suffering and business certainly is suffering. If you try to take something that is science, and then jam it into normal business institutions, it just doesn't work that well for either science or business."

Therefore, the science business (or the business of science) not only potentially widens the global digital divide, but also sets major limits on R&D in several important industries. To improve the relationship between science and business, Pisano suggests finding partners that truly believe in long-term, committed relationships, not those who are looking to diversify their risks. He also argues that integration matters a lot, which means you have to organize your R&D in a truly integrated fashion.

In the EU, too, experts call for a new approach to research and innovation in Europe:

- Research and innovation policy should focus on our greatest societal challenges.

- New networks, institutions and policies for open innovation should be encouraged.

- Spending on research, education and innovation should be increased, in part through bolder co-investment schemes.

- R&D and innovation programmes should be better coordinated and planned, both at EU level and among the Member States.

- Open competition should be standard in EU programmes.

Science and invention cannot be performed in isolation. Throughout the world, the dominant mode of research and innovation is through open collaboration – among small and large companies, university and industry, public and private sector, clusters and trading blocs. This requires an open environment for knowledge, talent and services to flow, and for critical mass to build where needed.

-----------------------------------------------------------------------

*Open Access (OA) can be provided in two ways:

- "Green OA" is provided by authors publishing in any journal and then self-archiving their postprints in their institutional repository or on some other OA website. Green OA journal publishers endorse immediate OA self-archiving by their authors.

- "Gold OA" is provided by authors publishing in an open access journal that provides immediate OA to all of its articles on the publisher's website.

The Directory of Open Access Journals lists a number of peer-reviewed open access journals for browsing and searching.

Open J-Gate, launched in 2006, is another index of articles published in English-language OA journals, peer-reviewed and otherwise. Open access articles can also often be found with a web search, using any general search engine or one specialized for the scholarly/scientific literature, such as OAIster and Google Scholar.

For the most part, the direct users of research articles are other researchers. Open access helps researchers as readers by opening up access to articles that their libraries do not subscribe to. Among the greatest beneficiaries of open access may be users in developing countries, where some universities currently find it difficult to pay for the subscriptions required to access the most recent journals. All researchers benefit from OA, as no library can afford to subscribe to every scientific journal and most can afford only a small fraction of them. Open access also extends the reach of research beyond its immediate academic circle. An OA article can be read by anyone: a professional in the field, a researcher in another field, a journalist, a politician or civil servant, or an interested hobbyist.